Evaluating the Impact of Human Explanation Strategies on Human-AI Visual Decision-Making

Published in CSCW 2023.

Abstract

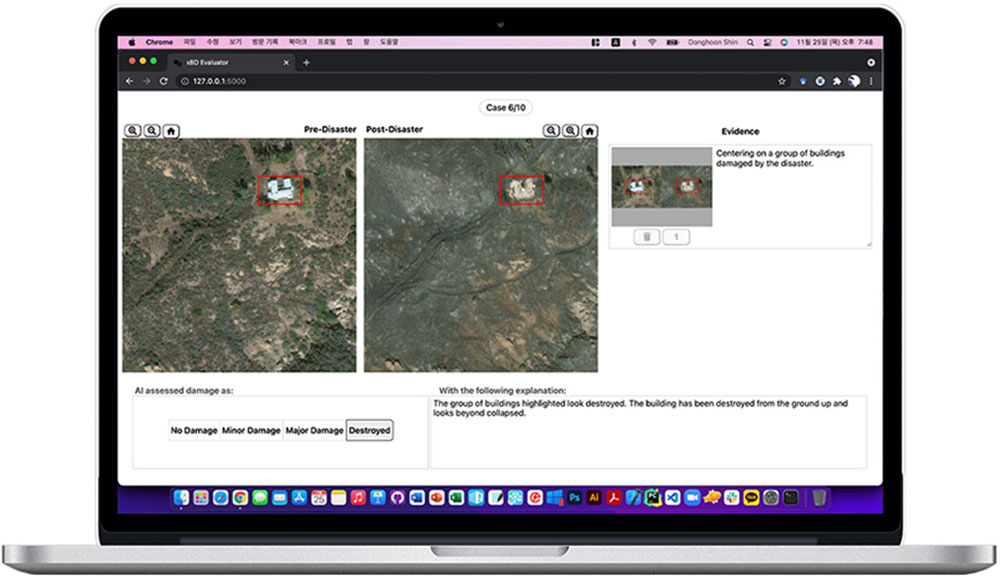

Artificial intelligence (AI) is increasingly being deployed in high-stakes domains, such as disaster relief and radiology, to aid practitioners during the decision-making process for interpreting images. Explainable AI techniques have been developed and deployed to provide users with insights into why the AI made a certain prediction. However, recent research suggests that these techniques may confuse or mislead users. We conducted a series of two studies to uncover the strategies humans use to explain decisions and then understand how those explanation strategies impact visual decision-making. In our first study, we elicit explanations from humans when assessing and localizing damaged buildings after natural disasters from satellite imagery and identify four core explanation strategies that humans employed. We then follow up by studying the impact of these explanation strategies by framing explanations from Study 1 as if they were generated by AI and showing them to a different set of decision-makers performing the same task. We provide initial insights on how causal explanation strategies improve humans’ accuracy and calibrate humans’ reliance on AI when the AI is incorrect. However, we also find that causal explanation strategies may lead to incorrect rationalizations when the AI presents a correct assessment with incorrect localization. We explore the implications of our findings for the design of human-centered explainable AI and address directions for future work.

Materials