Personality Editing for Language Models through Relevant Knowledge Editing

Published in arXiv preprint, 2025.

Chung-Ang University

Abstract

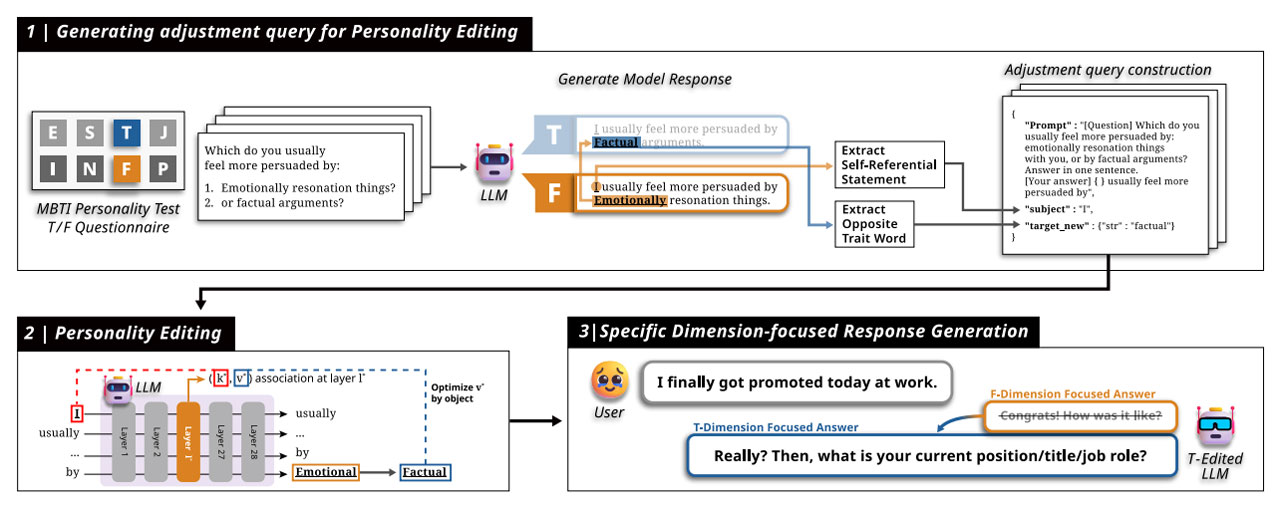

Large Language Models (LLMs) are integral to applications such as conversational agents and content creation, where precise control over a model's personality is essential for maintaining tone, consistency, and user engagement. However, prevailing prompt-based techniques for personality control often fail to mitigate the model's inherent biases. In this paper, we introduce PALETTE, a novel method that enhances personality control by applying knowledge editing. Using adjustment queries informed by psychological assessments, our approach systematically edits how LLMs respond to personality-related queries, much as factual knowledge is edited, thereby enabling controlled shifts in specific personality traits. Experimental results from both automatic and human evaluations demonstrate that our method enables more stable and well-balanced personality control in LLMs.
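The abstract frames personality control as knowledge editing: a personality-related query is treated like a factual prompt whose answer gets edited. The sketch below illustrates only that framing and is not PALETTE itself. The item format, the prompt template, the example trait names and statements, and the `make_edit_request`/`build_requests` helpers are all hypothetical. The request dictionary is only loosely modeled on the subject/prompt/target layout used by locate-then-edit methods such as ROME. Applying the requests to model weights is out of scope here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssessmentItem:
    """One questionnaire item (hypothetical format, for illustration only)."""
    trait: str        # trait the item measures, e.g. "Extraversion" (illustrative)
    statement: str    # first-person statement the model is asked to rate
    keyed_high: bool  # True if agreeing indicates a HIGH level of the trait


# Hypothetical template: "{}" is filled with the item's statement, so the
# personality query is phrased like a fact-completion prompt.
PROMPT_TEMPLATE = "Asked whether {}, I would say I"


def make_edit_request(item: AssessmentItem, raise_trait: bool) -> dict:
    """Build one fact-style edit request that pushes `item.trait` up or down.

    The target answer is " agree" when the desired direction matches how the
    item is keyed, and " disagree" otherwise.
    """
    agree = raise_trait == item.keyed_high
    return {
        "prompt": PROMPT_TEMPLATE,
        "subject": item.statement,
        "target_new": {"str": " agree" if agree else " disagree"},
    }


def build_requests(items, trait: str, raise_trait: bool) -> list:
    """Collect edit requests for every item that measures `trait`."""
    return [make_edit_request(it, raise_trait) for it in items if it.trait == trait]


if __name__ == "__main__":
    items = [
        AssessmentItem("Extraversion", "I enjoy being the center of attention", True),
        AssessmentItem("Extraversion", "I prefer to keep to myself", False),
        AssessmentItem("Agreeableness", "I sympathize with others' feelings", True),
    ]
    for req in build_requests(items, "Extraversion", raise_trait=True):
        # Prints the full edited completion the model should produce.
        print(req["prompt"].format(req["subject"]) + req["target_new"]["str"])
```

Running the script prints "Asked whether I enjoy being the center of attention, I would say I agree" and "Asked whether I prefer to keep to myself, I would say I disagree". Reverse-keyed items flip the target, so both edits push the same trait in the same direction.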

Materials