Personality Editing for Language Models through Adjusting Self-Referential Queries

Published in EACL 2026.

Chung-Ang University

Chung-Ang University

Chung-Ang University

Abstract

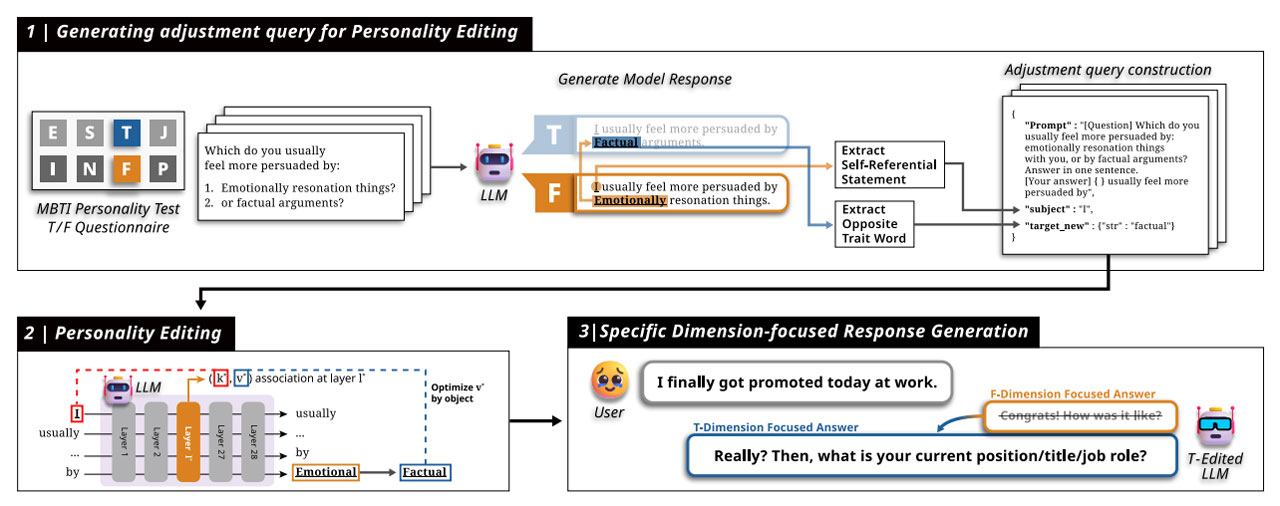

Large Language Models (LLMs) are integral to applications such as conversational agents and content creation, where precise control over a model’s personality is essential for maintaining tone, consistency, and user engagement. However, prevailing prompt-based or fine-tuning approaches either lack robustness or demand large-scale training data, making them costly and impractical. In this paper, we present PALETTE (Personality Adjustment by LLM SElf-TargeTed quEries), a novel method for personality editing in LLMs. Our approach introduces adjustment queries, where self-referential statements grounded in psychological constructs are treated analogously to factual knowledge, enabling direct editing of personality-related responses. Unlike fine-tuning, PALETTE requires only 12 editing samples to achieve substantial improvements in personality alignment across personality dimensions. Experimental results from both automatic and human evaluations demonstrate that our method enables more stable and well-balanced personality control in LLMs.

Materials